DoWhy: Different estimation methods for causal inference

This is a quick introduction to the DoWhy causal inference library. We will load in a sample dataset and use different methods for estimating the causal effect of a (pre-specified)treatment variable on a (pre-specified) outcome variable.

We will see that not all estimators return the correct effect for this dataset.

First, let us add the required path for Python to find the DoWhy code and load all required packages

[1]:

%load_ext autoreload

%autoreload 2

[2]:

import numpy as np

import pandas as pd

import logging

import dowhy

from dowhy import CausalModel

import dowhy.datasets

Now, let us load a dataset. For simplicity, we simulate a dataset with linear relationships between common causes and treatment, and common causes and outcome.

Beta is the true causal effect.

[3]:

data = dowhy.datasets.linear_dataset(beta=10,

num_common_causes=5,

num_instruments = 2,

num_treatments=1,

num_samples=10000,

treatment_is_binary=True,

outcome_is_binary=False,

stddev_treatment_noise=10)

df = data["df"]

df

[3]:

| Z0 | Z1 | W0 | W1 | W2 | W3 | W4 | v0 | y | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.0 | 0.434043 | 0.760717 | -1.032926 | -0.091354 | -1.690210 | 1.650846 | True | 12.790439 |

| 1 | 0.0 | 0.294732 | -1.701894 | -2.564533 | 0.433172 | -0.643343 | 1.143627 | False | -11.505611 |

| 2 | 1.0 | 0.653284 | -0.831432 | -0.311641 | 0.331886 | 0.721425 | 0.594511 | True | 10.996468 |

| 3 | 0.0 | 0.807549 | -1.605905 | 0.621894 | 0.261284 | 0.514066 | -1.275510 | True | 2.538622 |

| 4 | 1.0 | 0.750570 | 0.215663 | 0.176927 | -0.729734 | -1.959675 | 2.896418 | True | 18.498534 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 9995 | 1.0 | 0.914790 | 0.444008 | -2.476697 | -0.544444 | -0.492474 | -0.717574 | True | -5.603174 |

| 9996 | 0.0 | 0.417227 | -1.738763 | 0.380703 | -0.376255 | 0.091770 | 1.556368 | False | 0.777810 |

| 9997 | 1.0 | 0.625125 | 1.102980 | -0.415366 | -0.094656 | -0.683668 | 2.551121 | True | 22.810609 |

| 9998 | 0.0 | 0.174891 | -0.244618 | -2.119600 | -0.103280 | 1.130774 | -0.996929 | False | -13.183657 |

| 9999 | 0.0 | 0.301960 | -1.872290 | -1.327269 | 1.552263 | -0.389060 | 0.502846 | False | -4.480102 |

10000 rows × 9 columns

Note that we are using a pandas dataframe to load the data.

Identifying the causal estimand

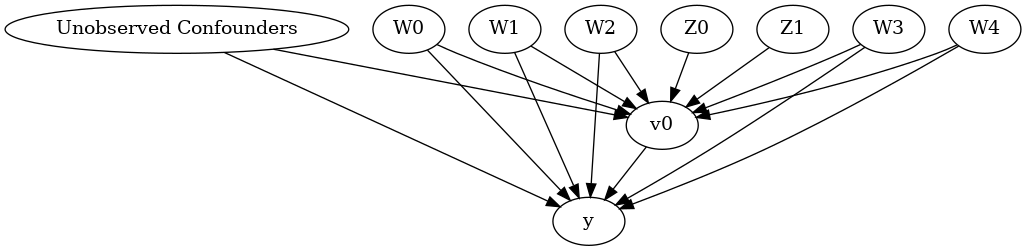

We now input a causal graph in the DOT graph format.

[4]:

# With graph

model=CausalModel(

data = df,

treatment=data["treatment_name"],

outcome=data["outcome_name"],

graph=data["gml_graph"],

instruments=data["instrument_names"]

)

[5]:

model.view_model()

[6]:

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

We get a causal graph. Now identification and estimation is done.

[7]:

identified_estimand = model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W1,W3,W0,W4,W2))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W1,W3,W0,W4,W2,U) = P(y|v0,W1,W3,W0,W4,W2)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z0, Z1])*Derivative([v0], [Z0, Z1])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

### Estimand : 3

Estimand name: frontdoor

No such variable found!

Method 1: Regression

Use linear regression.

[8]:

causal_estimate_reg = model.estimate_effect(identified_estimand,

method_name="backdoor.linear_regression",

test_significance=True)

print(causal_estimate_reg)

print("Causal Estimate is " + str(causal_estimate_reg.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W1,W3,W0,W4,W2))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W1,W3,W0,W4,W2,U) = P(y|v0,W1,W3,W0,W4,W2)

## Realized estimand

b: y~v0+W1+W3+W0+W4+W2

Target units: ate

## Estimate

Mean value: 9.99993149913471

p-value: [0.]

Causal Estimate is 9.99993149913471

Method 2: Distance Matching

Define a distance metric and then use the metric to match closest points between treatment and control.

[9]:

causal_estimate_dmatch = model.estimate_effect(identified_estimand,

method_name="backdoor.distance_matching",

target_units="att",

method_params={'distance_metric':"minkowski", 'p':2})

print(causal_estimate_dmatch)

print("Causal Estimate is " + str(causal_estimate_dmatch.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W1,W3,W0,W4,W2))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W1,W3,W0,W4,W2,U) = P(y|v0,W1,W3,W0,W4,W2)

## Realized estimand

b: y~v0+W1+W3+W0+W4+W2

Target units: att

## Estimate

Mean value: 12.215841016301951

Causal Estimate is 12.215841016301951

Method 3: Propensity Score Stratification

We will be using propensity scores to stratify units in the data.

[10]:

causal_estimate_strat = model.estimate_effect(identified_estimand,

method_name="backdoor.propensity_score_stratification",

target_units="att")

print(causal_estimate_strat)

print("Causal Estimate is " + str(causal_estimate_strat.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W1,W3,W0,W4,W2))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W1,W3,W0,W4,W2,U) = P(y|v0,W1,W3,W0,W4,W2)

## Realized estimand

b: y~v0+W1+W3+W0+W4+W2

Target units: att

## Estimate

Mean value: 10.07197614004532

Causal Estimate is 10.07197614004532

/home/amit/py-envs/env3.8/lib/python3.8/site-packages/sklearn/utils/validation.py:72: DataConversionWarning: A column-vector y was passed when a 1d array was expected. Please change the shape of y to (n_samples, ), for example using ravel().

return f(**kwargs)

Method 4: Propensity Score Matching

We will be using propensity scores to match units in the data.

[11]:

causal_estimate_match = model.estimate_effect(identified_estimand,

method_name="backdoor.propensity_score_matching",

target_units="atc")

print(causal_estimate_match)

print("Causal Estimate is " + str(causal_estimate_match.value))

/home/amit/py-envs/env3.8/lib/python3.8/site-packages/sklearn/utils/validation.py:72: DataConversionWarning: A column-vector y was passed when a 1d array was expected. Please change the shape of y to (n_samples, ), for example using ravel().

return f(**kwargs)

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W1,W3,W0,W4,W2))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W1,W3,W0,W4,W2,U) = P(y|v0,W1,W3,W0,W4,W2)

## Realized estimand

b: y~v0+W1+W3+W0+W4+W2

Target units: atc

## Estimate

Mean value: 9.907232635069317

Causal Estimate is 9.907232635069317

Method 5: Weighting

We will be using (inverse) propensity scores to assign weights to units in the data. DoWhy supports a few different weighting schemes: 1. Vanilla Inverse Propensity Score weighting (IPS) (weighting_scheme=“ips_weight”) 2. Self-normalized IPS weighting (also known as the Hajek estimator) (weighting_scheme=“ips_normalized_weight”) 3. Stabilized IPS weighting (weighting_scheme = “ips_stabilized_weight”)

[12]:

causal_estimate_ipw = model.estimate_effect(identified_estimand,

method_name="backdoor.propensity_score_weighting",

target_units = "ate",

method_params={"weighting_scheme":"ips_weight"})

print(causal_estimate_ipw)

print("Causal Estimate is " + str(causal_estimate_ipw.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W1,W3,W0,W4,W2))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W1,W3,W0,W4,W2,U) = P(y|v0,W1,W3,W0,W4,W2)

## Realized estimand

b: y~v0+W1+W3+W0+W4+W2

Target units: ate

## Estimate

Mean value: 11.738985221505024

Causal Estimate is 11.738985221505024

/home/amit/py-envs/env3.8/lib/python3.8/site-packages/sklearn/utils/validation.py:72: DataConversionWarning: A column-vector y was passed when a 1d array was expected. Please change the shape of y to (n_samples, ), for example using ravel().

return f(**kwargs)

Method 6: Instrumental Variable

We will be using the Wald estimator for the provided instrumental variable.

[13]:

causal_estimate_iv = model.estimate_effect(identified_estimand,

method_name="iv.instrumental_variable", method_params = {'iv_instrument_name': 'Z0'})

print(causal_estimate_iv)

print("Causal Estimate is " + str(causal_estimate_iv.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z0, Z1])*Derivative([v0], [Z0, Z1])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

## Realized estimand

Realized estimand: Wald Estimator

Realized estimand type: nonparametric-ate

Estimand expression:

-1

Expectation(Derivative(y, Z0))⋅Expectation(Derivative(v0, Z0))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

Estimand assumption 3, treatment_effect_homogeneity: Each unit's treatment ['v0'] is affected in the same way by common causes of ['v0'] and y

Estimand assumption 4, outcome_effect_homogeneity: Each unit's outcome y is affected in the same way by common causes of ['v0'] and y

Target units: ate

## Estimate

Mean value: 9.385939768111946

Causal Estimate is 9.385939768111946

Method 7: Regression Discontinuity

We will be internally converting this to an equivalent instrumental variables problem.

[17]:

causal_estimate_regdist = model.estimate_effect(identified_estimand,

method_name="iv.regression_discontinuity",

method_params={'rd_variable_name':'Z1',

'rd_threshold_value':0.5,

'rd_bandwidth': 0.15})

print(causal_estimate_regdist)

print("Causal Estimate is " + str(causal_estimate_regdist.value))

local_rd_variable local_treatment local_outcome

0 0.434043 True 12.790439

6 0.461987 False -7.378561

7 0.611614 True 6.345427

8 0.634963 True 3.758775

11 0.369412 True 10.021046

... ... ... ...

9977 0.456288 False -2.630567

9978 0.508402 False -6.946172

9982 0.432213 True 8.446996

9996 0.417227 False 0.777810

9997 0.625125 True 22.810609

[2983 rows x 3 columns]

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z0, Z1])*Derivative([v0], [Z0, Z1])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

## Realized estimand

Realized estimand: Wald Estimator

Realized estimand type: nonparametric-ate

Estimand expression:

Expectation(Derivative(y, local_rd_variable))⋅Expectation(Derivative(v0, local

-1

_rd_variable))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

Estimand assumption 3, treatment_effect_homogeneity: Each unit's treatment ['local_treatment'] is affected in the same way by common causes of ['local_treatment'] and local_outcome

Estimand assumption 4, outcome_effect_homogeneity: Each unit's outcome local_outcome is affected in the same way by common causes of ['local_treatment'] and local_outcome

Target units: ate

## Estimate

Mean value: 12.326948629676853

Causal Estimate is 12.326948629676853